Hand Interfaces: Using Hands to Imitate Objects in AR/VR for Expressive Interactions

AR and VR create exciting new opportunities for people to interact with computing resources and information. Less exciting is the need to hold hand controllers, which limits applications that demand expressive, readily available interactions. In response, prior research has investigated freehand AR/VR input that turns the user's body into an interaction medium. While prior work focuses on having users' hands grasp virtual objects, we propose a new interaction technique in which users' hands become virtual objects through imitation. For example, a thumbs-up hand pose can imitate a joystick. We have created a wide array of interaction designs around this idea to demonstrate its applicability to object retrieval and interactivity. Collectively, we call these interaction designs Hand Interfaces. Through a series of user studies comparing Hand Interfaces against various baseline techniques, we collected quantitative and qualitative feedback indicating that Hand Interfaces are effective, expressive, and fun to use.

Design Rationale

Overall, we believe Hand Interfaces have several innate advantages, and we designed our interaction techniques around them. First, like other free-hand interactions, Hand Interfaces are readily available to users and thus make task switching low-friction. This advantage is especially useful in AR scenarios, where users often need their hands to perform tasks in the physical world. Additionally, Hand Interfaces naturally offer tactile feedback through skin contact. Finally, Hand Interfaces leverage proprioception, which improves the precision of 3D manipulations. Compared with conventional free-hand interactions, Hand Interfaces can also be more expressive in some cases. For example, techniques that use grasping postures to let users directly "grasp" virtual objects create ambiguity in object retrieval when different objects share similar grasping postures. This is not uncommon, because everyday objects often adopt universal industry designs to improve their affordances. A virtual stapler, for instance, might be grasped the same way as a wand, a fork, a billiard cue, or anything else that features a pole-like user interface. In our design process, we aimed to make the most of these advantages. To achieve this, Hand Interfaces at times compromise other design considerations such as range of motion and flexibility, comfort, or realism. Balancing this wide range of considerations therefore requires careful design, a process we discuss in this section.

We conducted concept-driven brainstorming with all researchers in this project. Researchers were asked to come up with designs that use hands to imitate objects as user interfaces. Early ideas mostly involved digital interfaces inspired by prior work and conventional computer platforms, for example, using the palm as a keyboard, making an "O" gesture with the thumb and index finger as a click wheel, or using the index finger as a slider. The ideas then quickly evolved into a wide array of 3D objects, starting with common real-world controllers. Examples include a joystick imitated by a thumbs-up gesture (with the thumb "becoming" the stick), a mouse (with two fingers as the left and right buttons), and a toggle switch (imitated by an index finger joint). Finally, the ideas opened up to a wider array of objects that were less interfaces by design and more props users could use in AR/VR environments. Examples include tools such as a mug, hourglass, spray can, microphone, pair of scissors, wrench, fork, ladle, lever, binoculars, fishing rod, and pump; musical instruments such as a trumpet, bongo, and kalimba; and educational and entertainment props such as a globe, multimeter, color palette, and wand.

Design Criteria

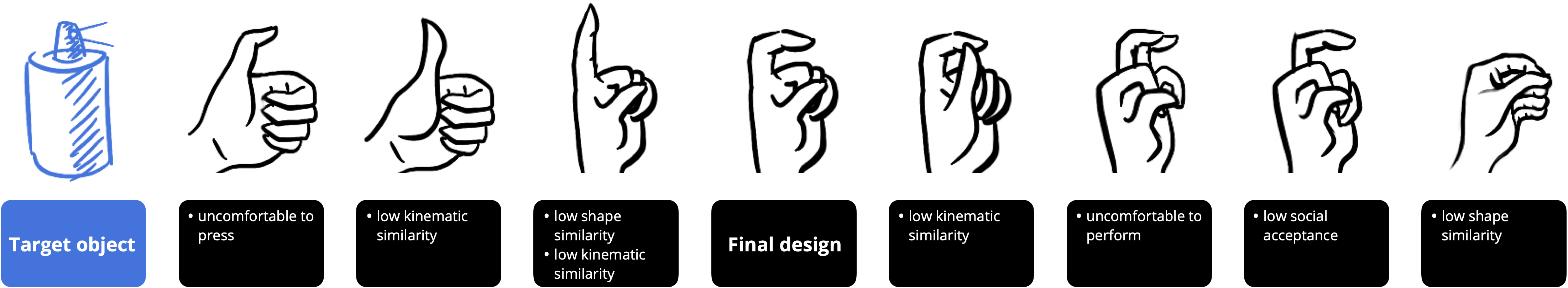

While designing interactions, we applied the following considerations when selecting among candidate designs. We use our design process for the Spray can as an illustrative example.

- The first consideration was shape similarity. We first removed design ideas whose hand poses least resembled the target objects. As we later found in the study, shape similarity also contributed to users' perception of realism.

- Another consideration was kinematic similarity. Here, we estimated how closely the dynamic characteristics (e.g., degrees of freedom) of the hand matched those of the object. In the Spray can example, a spray nozzle is meant to be pressed, so we removed designs that provided no "pressing-in" mechanism. In practice, we found that kinematic similarity was critical to the comfort of Hand Interfaces designs.

- We also considered comfort. Specifically, we avoided hand poses that were difficult to perform or uncomfortable to maintain. We also removed designs that felt uncomfortable when the manipulating hand pressed on the imitating hand during interactions.

- Finally, we considered social acceptance. Hand poses should be socially acceptable, as AR/VR has a wide range of applications that involve multiple users or take place in public spaces. Socially acceptable designs are therefore a prerequisite for success. In the Spray can example, we removed the design with a curled middle finger for reasons of social acceptance.

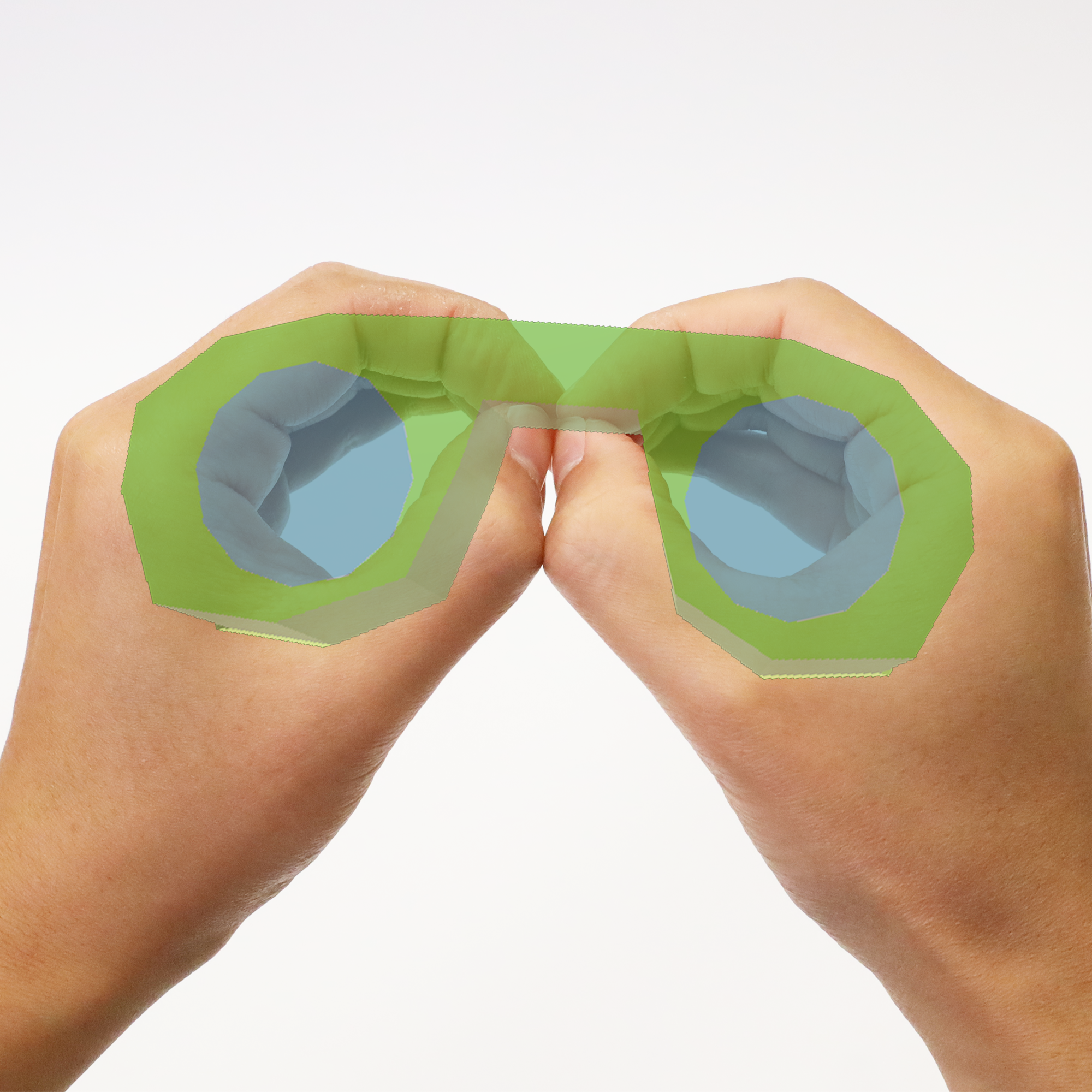

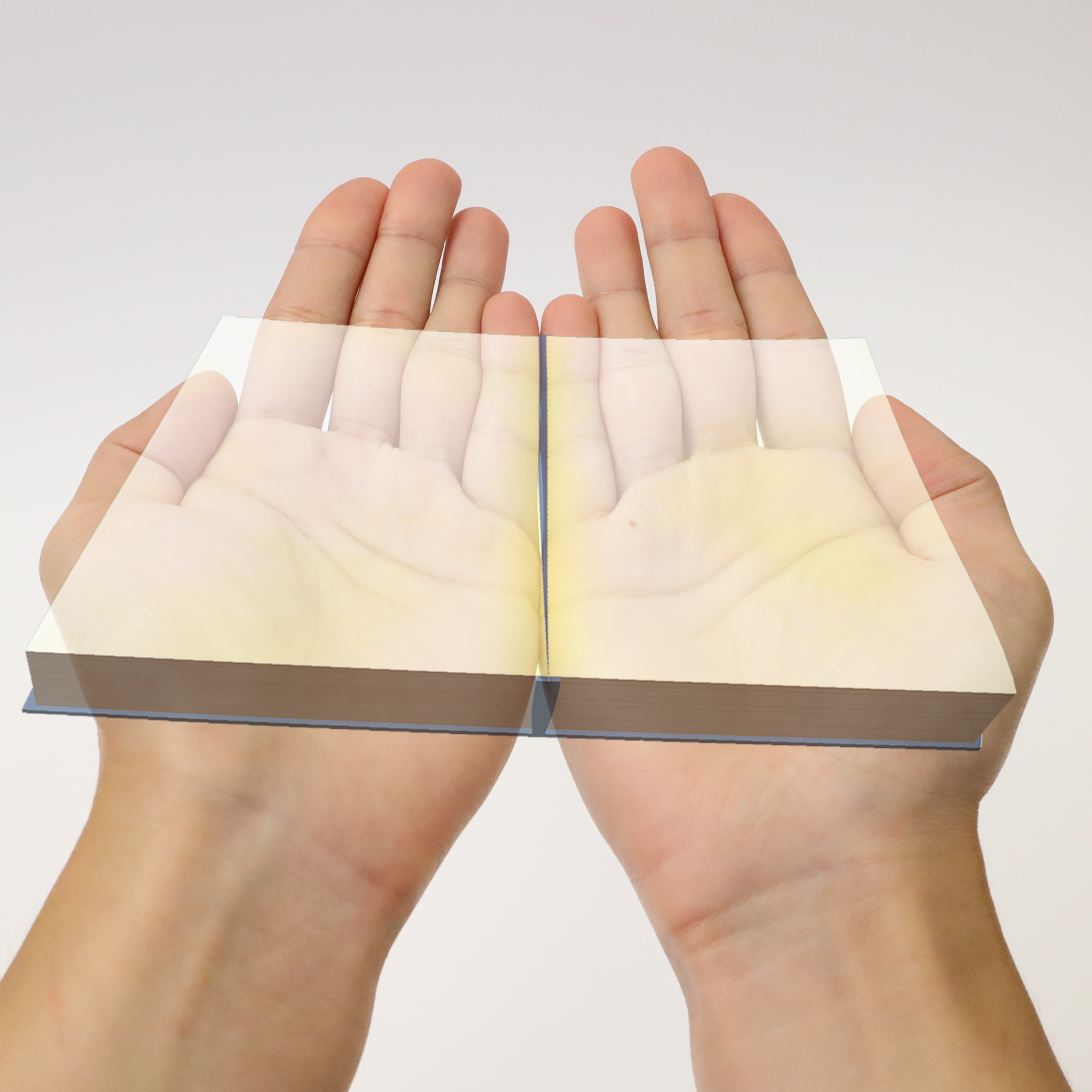

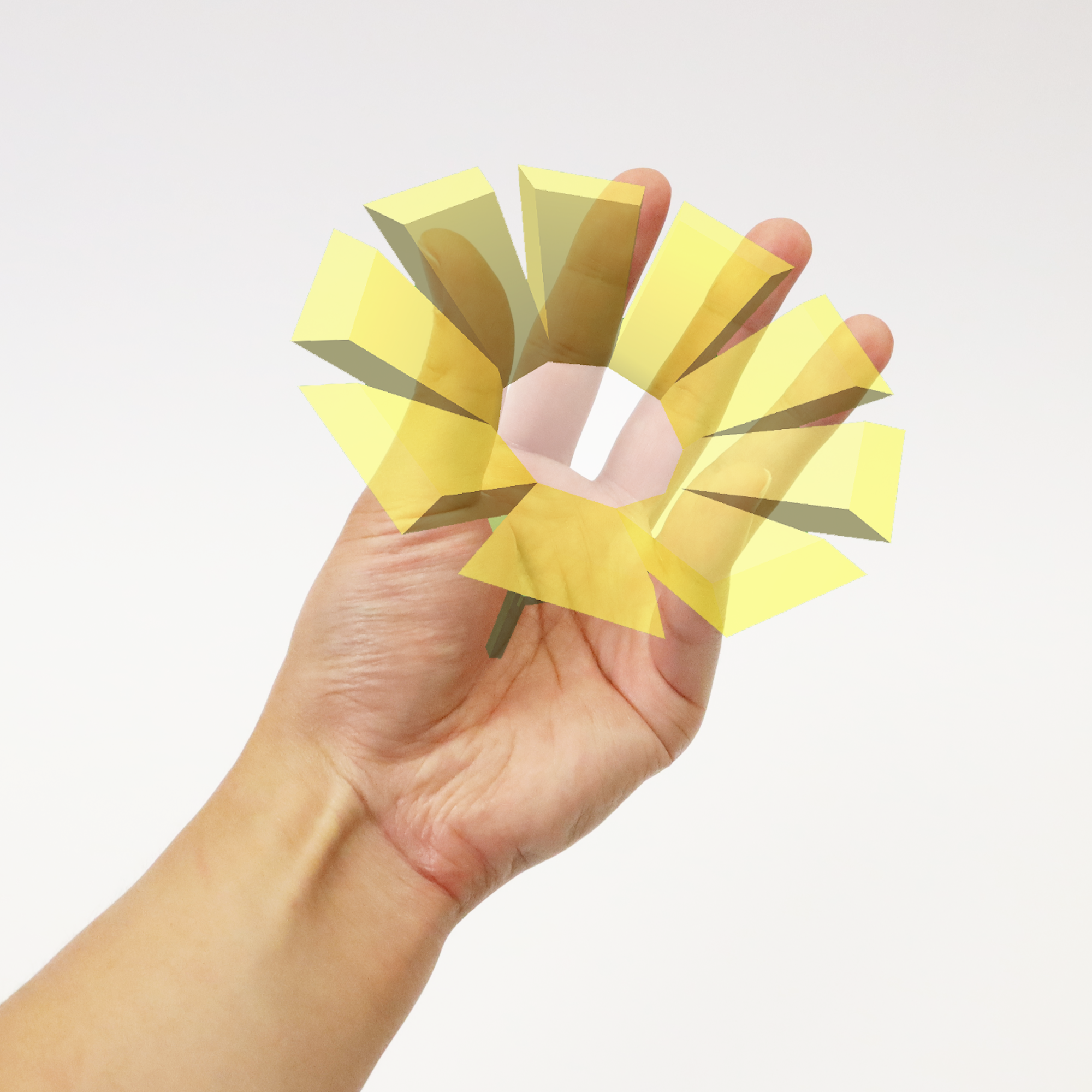

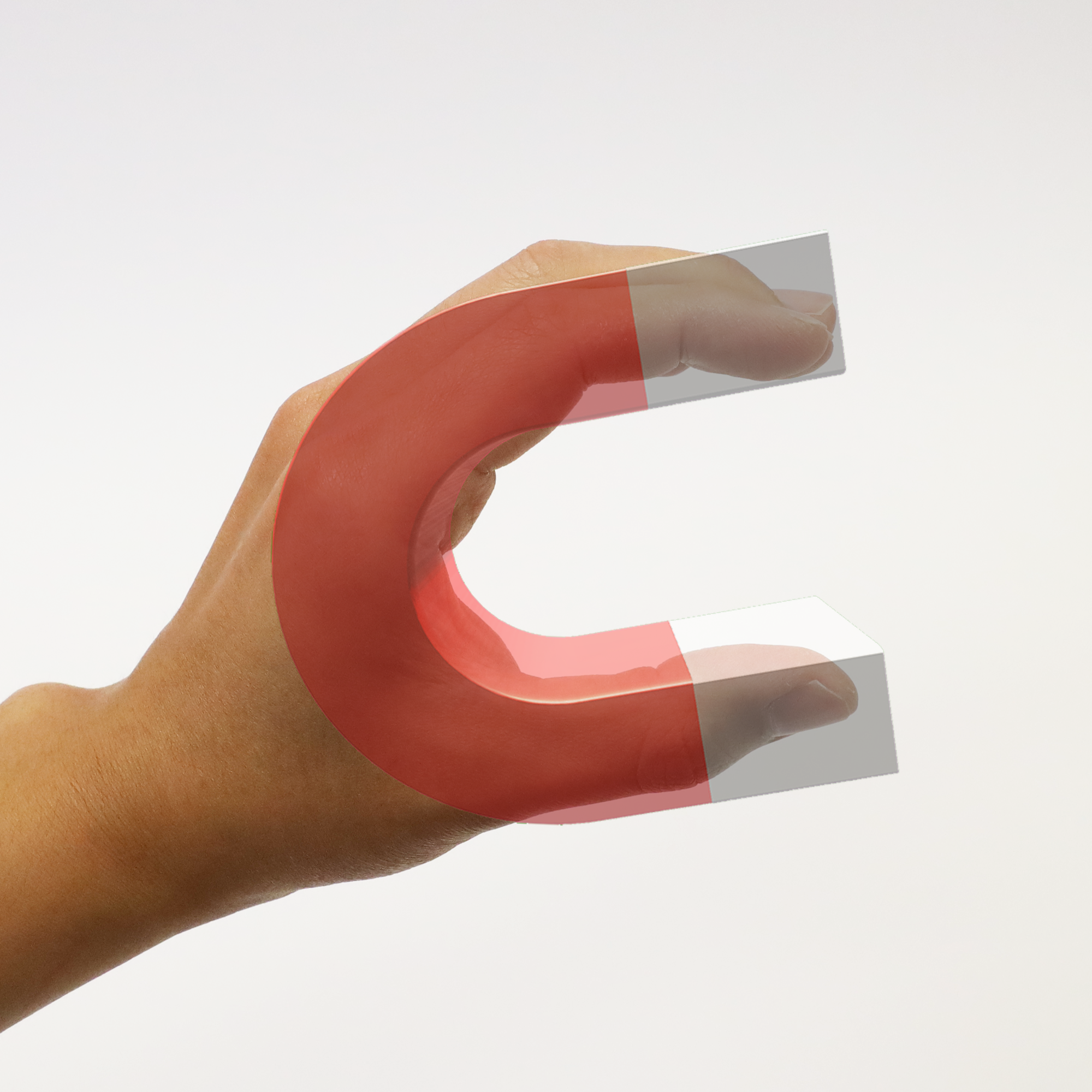

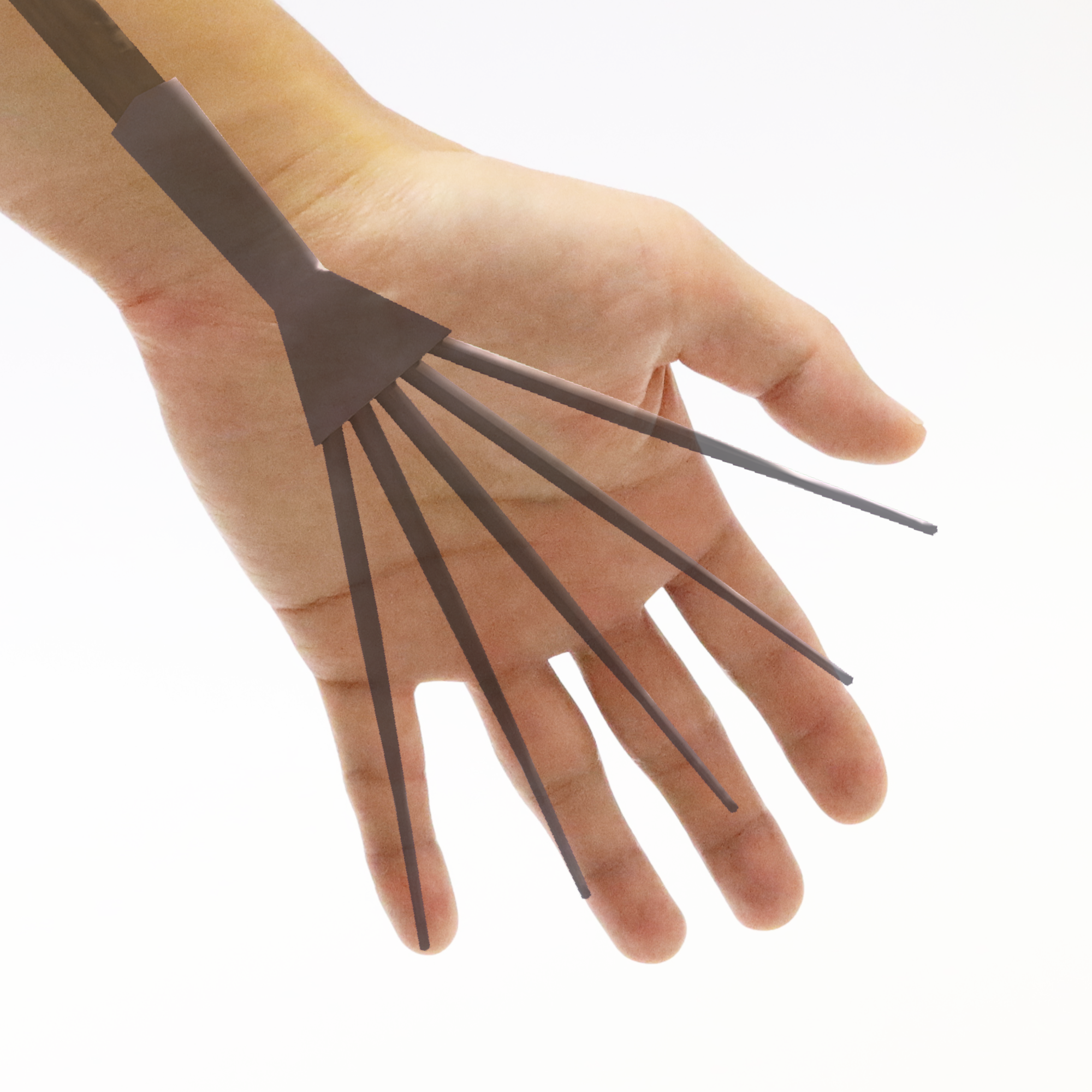

Eventually, our design process yielded 28 unique Hand Interfaces, shown in the gallery below. At a high level, these designs can be categorized by the number of hands involved: 22 designs involved only one hand (unimanual) and the remaining 6 involved two hands (bimanual). Of the 22 one-hand designs, 10 required manipulation by the other hand. Most designs were self-revealing. For example, to use the Ladle and the Fork, users performed the same interaction as they would in reality. Designs that involved both hands were similarly intuitive. For example, users could raise the Binoculars close to their eyes to switch their view from normal to long-range. Slightly more complicated were the one-hand designs that required manipulation by the other hand, which we describe below:

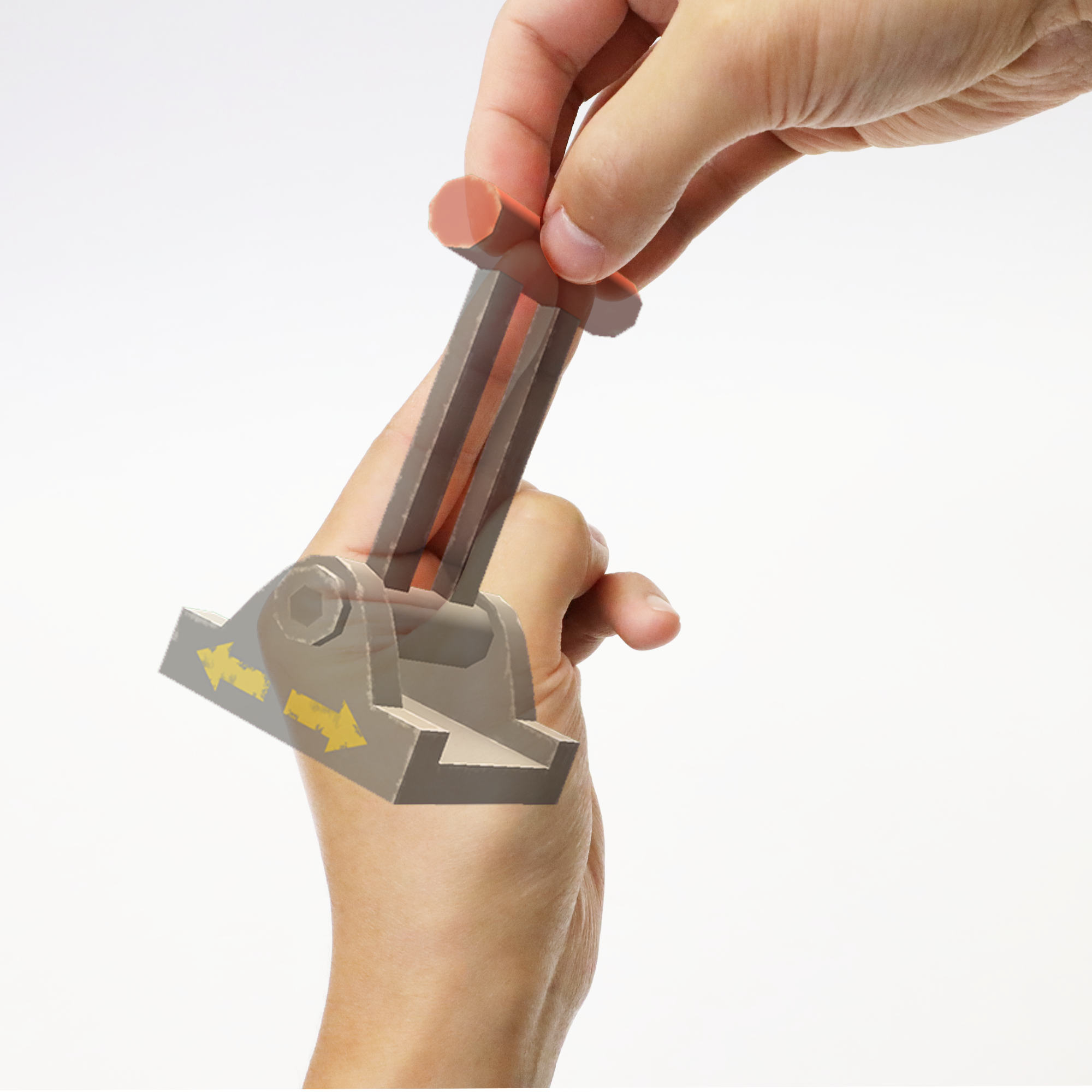

- Joystick - Users grasped the thumb of the imitating hand (the stick of the joystick) and moved it around to control something, e.g., the direction of a game character.

- Fishing rod - Imitated by pointing the thumb horizontally to one side as the fishing reel and extending the other fingers as the rod body. By rotating the thumb with the other hand, users could wind up the fishing line to reel in their bait.

- Kalimba - Also known as a "thumb piano". The four fingers (index, middle, ring, pinky) of the imitating hand became four keys. Users could tap these fingers to play the virtual kalimba's keys and create a simple melody.

- Inflator - Also known as a "manual air pump", imitated by a Spider-Man hand gesture. By squeezing the index and pinky fingers toward each other, users could compress the inflator and use it to inflate virtual balloons.

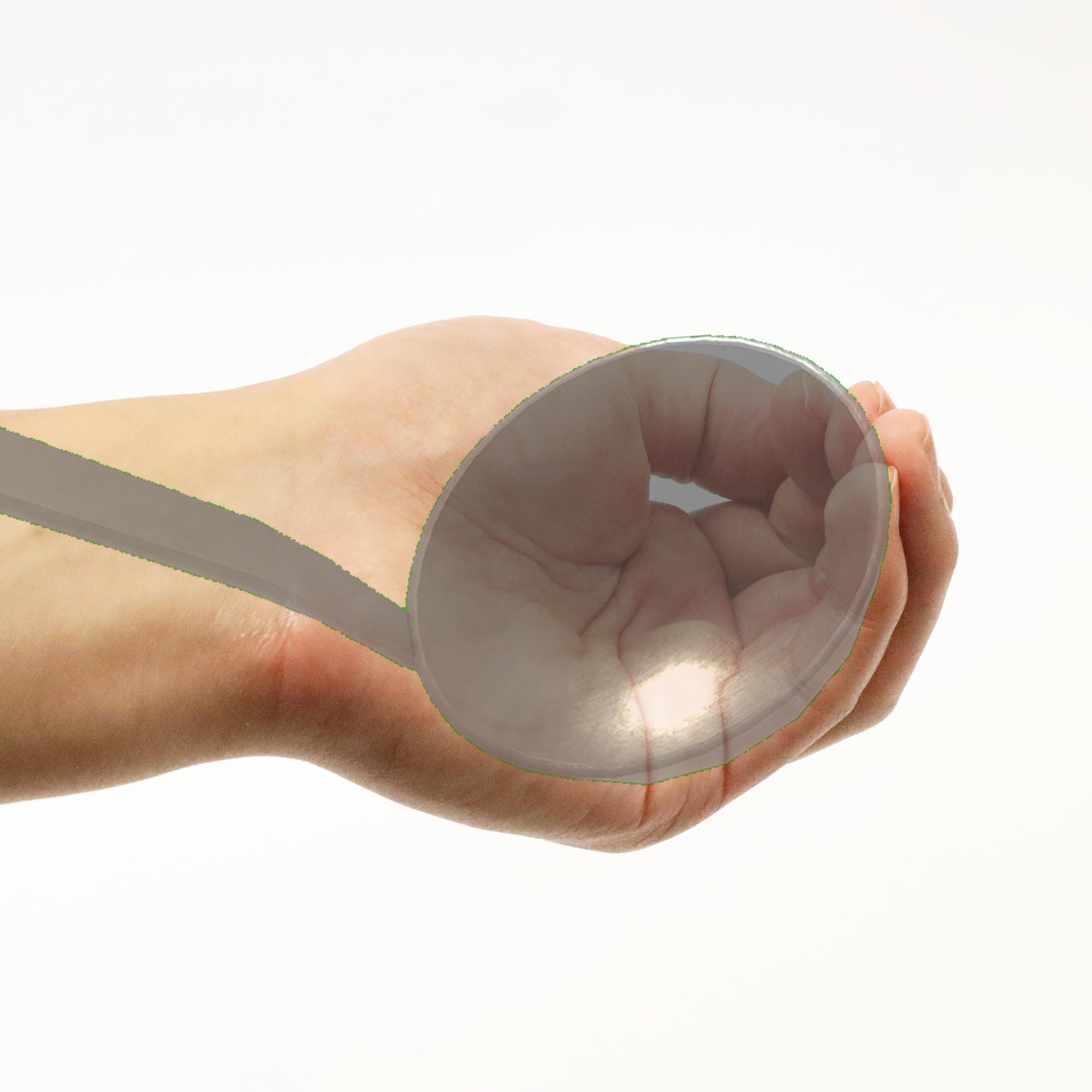

- Globe - Essentially a sphere imitated by a fist. Users interacted with the globe by touching the fist with the index finger of the manipulating hand. When users touched a location on the globe, an enlarged map of that location was displayed.

- Trumpet - Imitated by a fist with an extended pinky finger. The pinky finger of the imitating hand represented the bell of the trumpet, and the other fingers represented the trumpet body, with each joint imitating a valve.

- Toggle switch - A switch rendered on the first joint of the index finger of the imitating hand. Users could click on the joint to flip the switch, e.g., to turn virtual lights on or off.

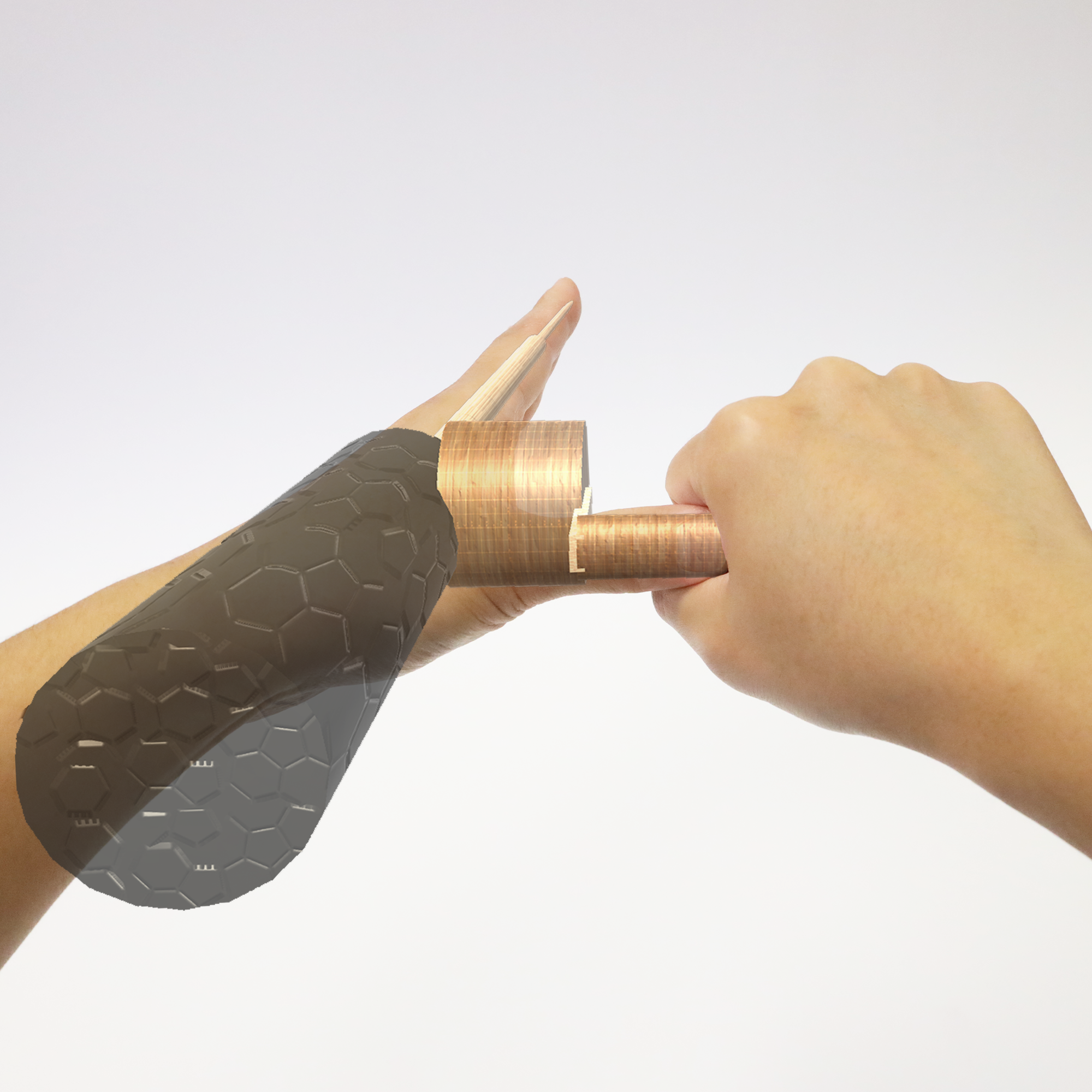

- Spray can - Represented by a hook-like hand gesture where the index finger imitated the nozzle and the other fingers acted like the spray can body. By pressing and holding the index finger of the imitating hand, users were able to spray paint in the air and create 3D artwork.

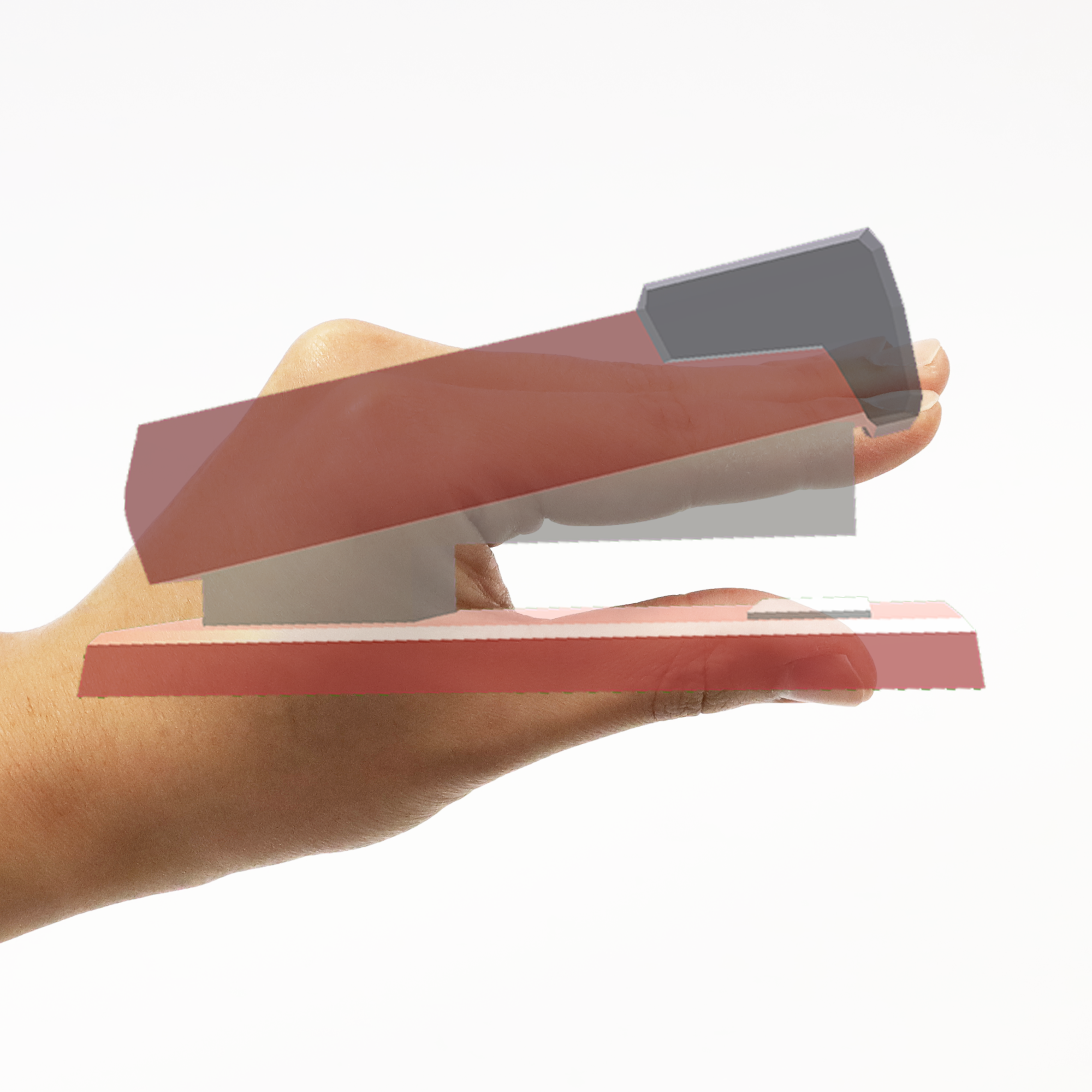

- Stapler - Consisted of a base imitated by extending the thumb and a handle imitated by extending the other fingers. Users could bind virtual documents by pushing the handle down onto the base with the other hand.
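To make the Joystick interaction described above more concrete, here is a minimal Python sketch of how thumb tilt might be turned into a 2D stick value. It is not the authors' implementation. It assumes a hand tracker that reports 3D positions of a thumb base joint and the thumb tip, a rest direction captured while the user holds the thumbs-up pose, and unit right/forward axes of the imitating hand that are perpendicular to that rest direction. The function name `joystick_axes` and the parameters `max_tilt_deg` and `dead_zone` are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _normalize(a: Vec3) -> Vec3:
    length = math.sqrt(_dot(a, a))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return _scale(a, 1.0 / length)


def joystick_axes(
    thumb_base: Vec3,
    thumb_tip: Vec3,
    rest_dir: Vec3,
    right_axis: Vec3,
    forward_axis: Vec3,
    max_tilt_deg: float = 30.0,  # hypothetical: tilt that maps to full deflection
    dead_zone: float = 0.1,  # hypothetical: fraction of full deflection ignored
) -> Tuple[float, float]:
    """Map thumb tilt away from the rest (thumbs-up) direction to a stick value in [-1, 1]^2.

    Assumes right_axis and forward_axis are unit vectors perpendicular to rest_dir.
    """
    d = _normalize(_sub(thumb_tip, thumb_base))
    r = _normalize(rest_dir)
    # Tilt angle between the current thumb direction and the rest direction.
    cos_tilt = max(-1.0, min(1.0, _dot(d, r)))
    tilt_deg = math.degrees(math.acos(cos_tilt))
    magnitude = min(tilt_deg / max_tilt_deg, 1.0)
    if magnitude < dead_zone:
        return (0.0, 0.0)  # treat small tremors around the rest pose as "centered"
    # Tilt direction: the component of d perpendicular to the rest direction,
    # expressed in the hand's right/forward axes.
    perp = _sub(d, _scale(r, _dot(d, r)))
    x, y = _dot(perp, right_axis), _dot(perp, forward_axis)
    length = math.hypot(x, y)
    if length == 0.0:
        return (0.0, 0.0)
    return (magnitude * x / length, magnitude * y / length)
```

The dead zone keeps small tremors of a held pose from registering as input, and clamping at `max_tilt_deg` reflects that a thumb has a much smaller range of motion than a physical stick, one of the range-of-motion trade-offs noted in the Design Rationale.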

For a comprehensive demonstration of these Hand Interfaces in action, please refer to our Demo Video. Inspired by VirtualGrasp, we found that Hand Interfaces are also useful for object retrieval: users can perform a hand pose to retrieve the corresponding virtual object for further interaction. We implemented a detection pipeline on a commercially available VR headset (Oculus Quest) to demonstrate the feasibility of Hand Interfaces. Please refer to the Implementation section for further details on the pipeline.
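The actual pipeline is described in the Implementation section. Purely as an illustration of pose-based retrieval, the following Python sketch matches a tracked hand pose against per-object templates with a nearest-neighbour rule and retrieves an object only after the pose has been held for several consecutive frames. Everything here is a hypothetical stand-in rather than the authors' method: the feature encoding (wrist-relative joint positions normalized for hand size), the names `pose_features`, `classify_pose`, and `DwellRetriever`, and the `max_distance` and `dwell_frames` thresholds. The sketch also ignores hand orientation, which a real detector would likely need to handle.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def pose_features(joints: Sequence[Point]) -> List[float]:
    """Flatten wrist-relative joint positions, scaled so the farthest joint lies at distance 1.

    joints[0] is assumed to be the wrist; at least one more joint is required.
    """
    wx, wy, wz = joints[0]
    rel = [(x - wx, y - wy, z - wz) for (x, y, z) in joints[1:]]
    scale = max(math.sqrt(x * x + y * y + z * z) for (x, y, z) in rel) or 1.0
    return [c / scale for p in rel for c in p]


def classify_pose(
    features: List[float], templates: Dict[str, List[float]], max_distance: float
) -> Optional[str]:
    """Return the name of the nearest template, or None if none is within max_distance."""
    best_name, best_dist = None, math.inf
    for name, template in templates.items():
        dist = math.dist(features, template)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None


class DwellRetriever:
    """Report an object once its pose has been recognized for dwell_frames consecutive frames."""

    def __init__(
        self, templates: Dict[str, List[float]], max_distance: float = 0.5, dwell_frames: int = 15
    ) -> None:
        self.templates = templates
        self.max_distance = max_distance
        self.dwell_frames = dwell_frames
        self._candidate: Optional[str] = None
        self._count = 0

    def update(self, joints: Sequence[Point]) -> Optional[str]:
        """Feed one frame of joints; returns an object name once per sustained pose."""
        label = classify_pose(pose_features(joints), self.templates, self.max_distance)
        if label != self._candidate:
            # The recognized pose changed (or was lost): restart the dwell counter.
            self._candidate = label
            self._count = 0
        if label is None:
            return None
        self._count += 1
        return label if self._count == self.dwell_frames else None
```

Requiring the pose to persist for a few frames is one simple way to avoid retrieving an object while the hand merely passes through a similar pose; a nearest-neighbour rule over templates is only one of many possible classifiers.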

Our Designs

As described in the Design Rationale, we implemented 28 unique designs, both unimanual and bimanual. All of them are shown in the following gallery.

Discussion

Overall, we believe Hand Interfaces introduce a new way to interact with the virtual world. Existing interaction techniques focus on design ideas that people are already familiar with. For example, the Drop-down Menu (the baseline technique in our study) follows the same idea as GUIs on conventional computer platforms and leverages users' experience with these digital interfaces. Meanwhile, direct Virtual Manipulation (e.g., VirtualGrasp) opens up a new direction by attempting to reproduce the experience of manipulating physical objects in reality (i.e., physical interfaces). Extending users' experience with conventional interfaces (digital and physical) to 3D virtual worlds draws on prior knowledge that improves learnability, but it may miss the large design space that AR/VR uniquely provides. Hand Interfaces do not primarily attempt to leverage users' real-world experience. Instead, they create a new interaction modality specific to AR/VR by allowing users' hands to become these objects through imitation.